ID3 (Iterative Dichotomiser 3) in Decision Tree

In decision tree learning, ID3 (Iterative Dichotomiser 3) is an algorithm invented by Ross Quinlan in 1986 used to generate a decision tree from a dataset. ID3 is the precursor to the C4.5 algorithm, and is typically used in the machine learning and natural language processing domains.

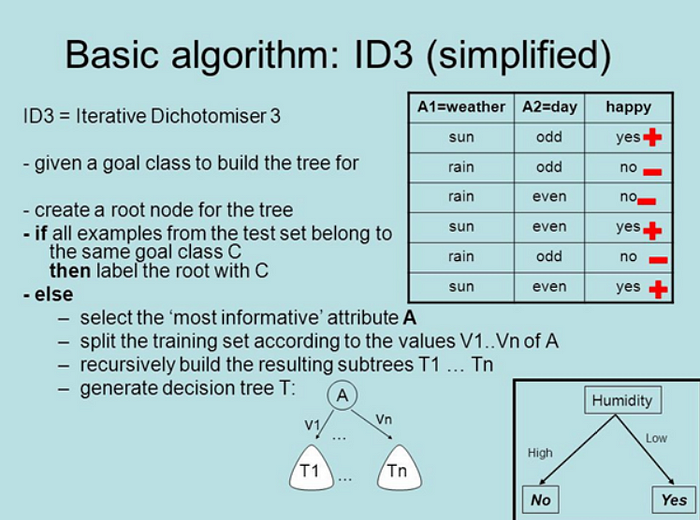

ID3 decision tree algorithm uses Information Gain to decide the splitting points. In order to measure how much information we gain, we can use entropy to calculate the homogeneity of a sample. It uses a top-down greedy approach to build a decision tree.

In simple words, the top-down approach means that we start building the tree from the top and the greedy approach means that at each iteration we select the best feature at the present moment to create a node.

ID3 only work with Discrete or nominal data.

ID3 algorithm iteratively (repeatedly) dichotomizes(divides) features into two or more groups at each step. ID3 follows the principle of Occam’s razor in attempting to create the smallest decision tree possible.

Ockham’s Razor is a logical principle for evaluating competing hypotheses to explain something. The rule only comes into play if there exist multiple competing explanations for something.

ID3 lead to multiway split unlike CART, which can have binary or multiway split based on choice of splitting criteria.

Entropy: — It is a measure of the amount of uncertainty in a data set. Entropy controls how a Decision Tree decides to split the data. It actually affects how a Decision Tree draws its boundaries.

In the case of binary classification (where the target column has only two types of classes) entropy is 0 if all values in the target column are homogenous(similar) and will be 1 if the target column has equal number values for both the classes.

ID3 Steps

- Calculate the Information Gain of each feature.

- Considering that all rows don’t belong to the same class, split the dataset S into subsets using the feature for which the Information Gain is maximum.

- Make a decision tree node using the feature with the maximum Information gain.

- If all rows belong to the same class, make the current node as a leaf node with the class as its label.

- Repeat for the remaining features until we run out of all features, or the decision tree has all leaf nodes.

References : —

https://sid-sharma1990.medium.com/decision-tree-and-its-types-76db66644622

https://sid-sharma1990.medium.com/ensemble-techniques-in-machine-learning-23f6a55faa17